Artist(s):

· Jianhao Zheng

School Information:

Artwork Information:

Description:

In Direction: Earthbound, the artist operates an imaginary vehicle that runs along a track of distance, carrying passengers outward to imagine increasingly distant perspectives.

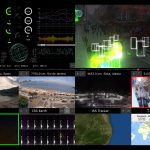

This multimedia performance harnesses live-streamed surveillance feeds and artificial intelligence to craft an evolving audiovisual landscape. As the perspective shifts outward, the work becomes increasingly fractured and distorted – a powerful metaphor for the paradoxical nature of digital connectivity.

At its final destination, the vehicle reaches space, the perspective furthest from our everyday lives. All the different places and times, every sunrise and sunset, happen simultaneously on this tiny blue sphere wandering through the vast, empty cosmos. Distance on Earth becomes an arbitrary number, and beyond our differences in opinion and stance, we share the same feelings toward the Earth.

This round trip ends with a one-way view: the missing descent takes place within the passengers' minds, drawing the essential connection between the individual and the perspective universally shared among us earthlings.

The artistic intent of this work is rooted in a vision of a connected future, in which digital technology extends our reach while imagination, empathy, and sincerity bring back genuine human connection.

Technical Information:

In this custom-built TouchDesigner system, multiple YouTube live streams serve as the video sources. An embedded script runs searches that return the distance to each stream's location, and the video feeds are sorted by that distance. A physical knob controls the distance, switching between the videos accordingly and navigating the whole performance.
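The distance ordering and knob navigation can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the artwork's actual script: it assumes each stream's latitude and longitude are already known (the original obtains distances through searches whose details are not documented), computes great-circle distance with the haversine formula, and maps a normalized knob reading to the feed whose distance is closest to a target. The `Feed` record, the feed names, and `select_feed` are hypothetical.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; a spherical Earth is assumed

@dataclass
class Feed:
    """Hypothetical stream record; names and coordinates are illustrative."""
    name: str
    lat: float
    lon: float

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in degrees, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp guards against tiny float overshoot for near-antipodal points.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))

def sort_by_distance(feeds, origin_lat, origin_lon):
    """Return (distance_km, feed) pairs ordered from nearest to farthest."""
    pairs = [(haversine_km(origin_lat, origin_lon, f.lat, f.lon), f) for f in feeds]
    return sorted(pairs, key=lambda p: p[0])

def select_feed(sorted_pairs, knob):
    """Hypothetical knob mapping: a reading in [0, 1] picks the feed nearest the target distance."""
    if not sorted_pairs:
        raise ValueError("no feeds available")
    knob = min(max(knob, 0.0), 1.0)             # clamp noisy hardware readings
    target = knob * sorted_pairs[-1][0]         # 0 = here, 1 = farthest feed
    return min(sorted_pairs, key=lambda p: abs(p[0] - target))[1]
```

Sorting once and then searching by target distance keeps the knob continuous: turning it sweeps smoothly from nearby feeds to distant ones, whatever the number of streams.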

Using MediaPipe, Google's framework for real-time machine learning that includes computer vision models, the system analyzes the video feeds to recognize people and objects. Two sets of data are retrieved from MediaPipe: a) an object detection model outputs each detected category and its on-screen coordinates, and b) an image segmentation model outputs a colormap of the areas identified as face, hair, skin, clothes, and so on. The object data is used to generate geometric shapes, and the segmented colormap is used to create abstract colors and patterns through a video loopback.
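The two data paths can be sketched without the MediaPipe API itself, using plain Python structures in place of its outputs. This is an illustrative sketch, not the artwork's TouchDesigner network: the `Detection` record, the category-to-shape table, the palette colors, and the feedback amount are all hypothetical. The class indices assume MediaPipe's multiclass selfie segmenter (0 background, 1 hair, 2 body skin, 3 face skin, 4 clothes, 5 other), and the loopback is modeled as a simple blend of the previous output frame into the current one.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    """Hypothetical detector output: a label plus a normalized (0..1) bounding box."""
    category: str
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height

# Hypothetical mapping from detected category to a generated shape.
SHAPE_FOR_CATEGORY = {"person": "circle", "car": "rectangle"}

def detection_to_shape(det, width, height):
    """Turn one detection into a pixel-space shape description."""
    return {
        "shape": SHAPE_FOR_CATEGORY.get(det.category, "triangle"),
        "center": ((det.x + det.w / 2) * width, (det.y + det.h / 2) * height),
        "size": (det.w * width, det.h * height),
    }

# Assumed segmentation class indices (see lead-in); the RGB colors are arbitrary.
PALETTE = {
    0: (0, 0, 0),        # background
    1: (255, 120, 0),    # hair
    2: (0, 200, 255),    # body skin
    3: (255, 0, 160),    # face skin
    4: (120, 255, 0),    # clothes
    5: (255, 255, 255),  # other
}

def mask_to_colormap(mask):
    """Replace each per-pixel class index with its palette color (unknown -> black)."""
    return [[PALETTE.get(c, (0, 0, 0)) for c in row] for row in mask]

def loopback(prev, current, feedback=0.8):
    """Blend the previous output back into the new frame; high feedback leaves long trails."""
    return [
        [tuple(round(feedback * p + (1 - feedback) * c) for p, c in zip(pp, cc))
         for pp, cc in zip(prev_row, cur_row)]
        for prev_row, cur_row in zip(prev, current)
    ]
```

Feeding each `loopback` result back in as `prev` on the next frame is what lets static colormaps smear into evolving patterns; a larger `feedback` value makes older frames linger longer.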

The data is also translated into sound, so the audio reflects changes in both the raw and the processed data. The audio comprises two sets of oscillators, referencing Just Intonation and the Pythagorean scale. Adjusting the ratios and base frequencies changes the emotional character of the sound dramatically, which the work uses creatively as an abstract form of storytelling.
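The two tuning systems can be compared by computing oscillator frequencies from their ratios. This sketch assumes a seven-note major scale for each bank, which the description does not specify: the Just Intonation ratios are the standard 5-limit major scale, and the Pythagorean ratios are built by stacking pure fifths (3/2) and folding each result into one octave. The function names and the base frequency in the example are hypothetical.

```python
import math
from fractions import Fraction

# Standard 5-limit Just Intonation major scale (assumed scale choice).
JUST_MAJOR = [Fraction(1), Fraction(9, 8), Fraction(5, 4), Fraction(4, 3),
              Fraction(3, 2), Fraction(5, 3), Fraction(15, 8)]

def pythagorean_scale(fifths_below=1, fifths_above=5):
    """Stack pure fifths (3/2) and fold each ratio into the octave [1, 2)."""
    ratios = []
    for k in range(-fifths_below, fifths_above + 1):
        r = Fraction(3, 2) ** k
        while r >= 2:
            r /= 2
        while r < 1:
            r *= 2
        ratios.append(r)
    return sorted(ratios)

def oscillator_bank(base_hz, ratios):
    """Frequencies in Hz for one bank of oscillators tuned to the given ratios."""
    return [base_hz * float(r) for r in ratios]

def mix_sample(freqs, t):
    """One sample at time t (seconds) of equal-amplitude sine oscillators, normalized to [-1, 1]."""
    return sum(math.sin(2 * math.pi * f * t) for f in freqs) / len(freqs)
```

For example, `oscillator_bank(220.0, JUST_MAJOR)` and `oscillator_bank(220.0, pythagorean_scale())` share the same root, fourth, and fifth but differ on the third, sixth, and seventh. The Pythagorean third (81/64) is sharper than the just third (5/4) by the syntonic comma, 81/80 (about 21.5 cents), which is one plausible source of the shifts in emotional color when the two banks are played against each other.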